Learning Curves and Rabbit Holes

This learning project has been a fascinating trip to date. In addition to incremental learning about Python and data science, I have learned a ton about how I work. These lessons have been valuable and transferable to other domains like work. Increased self-awareness, combined with the satisfaction of seeing progress in my Python coding, has greatly contributed to the sustainability of the project to date. I am surprised and excited that my interest remains at a high level after 7-ish weeks.

Oddly, the facet of the project I dislike most is the documentation. After muddling through hours of tutorials and exercises, the last thing I want to do is stop and write about it. Either I want to keep chasing whatever momentum I have generated, or I am so frustrated with where I find myself that I have no desire to document the frustration.

I have decided to blog intermittently and thoughtfully. Now that I am over the initial step of being introduced to the foundations of Python, and am into building different things, I can see the value in documentation.

Prototype 0: Build a web scraper with Selenium

Using the Nova Scotia Food Establishment Inspection Data as the desired data source and a tidy .csv file as the desired output, I set to work on applying what I have learned to date. What started with a tutorial from Automate the Boring Stuff with Python soon expanded to include a dozen tabs for various YouTube tutorials and Stack Overflow threads.

Three evenings of intermittent learning have yielded some success and a plethora of rabbit holes. I have been coding using a basic notepad application and IDLE. I soon realized the benefit of other tools, but which tools? I have attended workshops using Anaconda, PyCharm, and Jupyter as a standalone, and I had no clue which to use when. In addition to this, I want to document my learning from a code perspective, which had me wondering about GitHub and when / how this application fits into my process. Then, I remembered this damn blog and quickly recalled why I hated documenting this process to date.

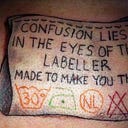

I looked up from my screen to see that 25 minutes had passed, during which I had switched from trying to figure out how to export scraped web material into a .csv file (note to self: watch introduction to Pandas tutorial before bed) to a half dozen open tabs on Anaconda, PyCharm, intro to GitHub... yeesh! I felt like my learning curve had turned into some nightmare of knowledge fractals and I couldn’t keep up with the rate of growth.

Step 1: I installed Anaconda and will use Jupyter notebooks within Anaconda for the next 3 months as an experiment. With the Lighthouse Labs 21-day data challenge starting this week, it is time to start trying out context-specific tools.

Step 2: GitHub learning curve. I am pausing this offshoot project until late March, when I have vacation booked. Setting up a repository is not mission critical to maintaining the momentum of my learning efforts. I have seen examples of GitHub Pages and find the idea of keeping code, web pages and potentially any writing in one central location very appealing. Despite the appeal of potential efficiency, this is a second-tier goal. Python coding and data science skill building are the number one goal for 2021.

Step 3: Bring on the Pandas! I have ascertained that you can export content acquired from a web scrape into a .csv file via the pandas library. This week, I figure out how. This step will be tested in Anaconda, using the web scraper project.
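
For reference, here is a minimal sketch of what that export might look like. It assumes the scraper hands back a list of dictionaries, one per inspection record. The column names, the sample rows, the `rows_to_csv` helper and the `inspections.csv` file name are all placeholders made up for illustration, not the real fields of the inspection data.

```python
import pandas as pd

# Hypothetical rows standing in for whatever the scraper collects.
# The keys are assumed column names, not the real data set's fields.
scraped_rows = [
    {"establishment": "Example Cafe", "inspection_date": "2021-01-15", "result": "Pass"},
    {"establishment": "Sample Diner", "inspection_date": "2021-01-20", "result": "Follow-up required"},
]


def rows_to_csv(rows, path):
    """Turn a list of dicts into a DataFrame, write it to a CSV file, and return it."""
    df = pd.DataFrame(rows)
    # index=False leaves out the row-number column pandas would otherwise add.
    df.to_csv(path, index=False)
    return df


df = rows_to_csv(scraped_rows, "inspections.csv")
```

Collecting each scraped record as a dictionary keeps the scraping step and the export step separate, so the same helper should work no matter how the rows were gathered.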

Let us see where I am with this proposed course of action come next Sunday.